
Our reality is getting more fake: how could forensic investigation detect deepfakes?

17 May 2021

Article by DuckDuckGoose and Foclar

Deepfakes are hyper-realistic fake images or videos, generated or manipulated by artificial intelligence (AI), in which real or non-existent people appear to do and say things they never did. University College London [1] has ranked deepfakes as the most serious AI crime threat. Because deepfake technology is ever more accessible and internet platforms spread content quickly, convincing deepfakes can reach millions of people and have a devastating impact on our society. Consider, for example, fake evidence submitted to a court, sockpuppets [2], blackmail, terrorism, or fake news in which trusted political figures appear to spread disinformation that causes social disruption.

Deepfake generation

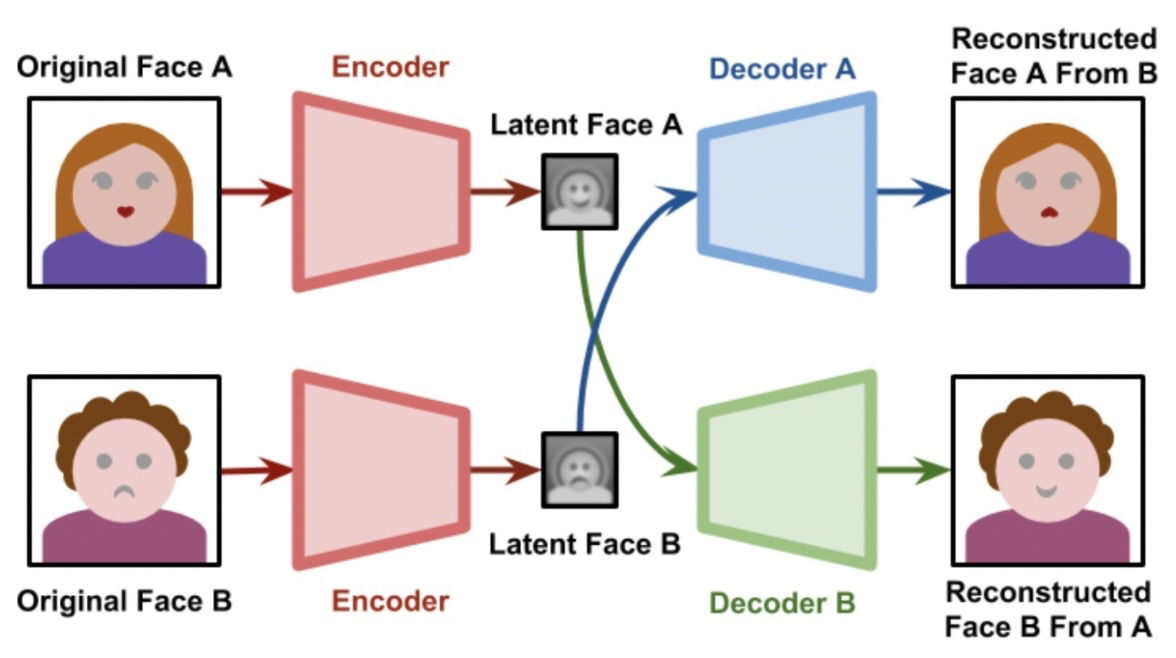

Deepfakes have become popular because their applications are easy to use for a wide range of users, with or without computer skills. These applications rely on deep learning techniques, which are well suited to representing complex, high-dimensional data. The first deepfake generation application, FakeApp, was developed by a Reddit user and uses an autoencoder-decoder pairing structure [3]. In this method, an encoder extracts latent features from face images and a decoder reconstructs the face images from those features. To swap faces between two target images, two encoder-decoder pairs are needed that share the same encoder, with one decoder trained on each person's face. Passing a face of person A through the shared encoder and then through person B's decoder yields person B's face with A's pose and expression.
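To make the shared-encoder idea concrete, here is a minimal toy sketch in Python. It is not FakeApp or faceswap code: it assumes each "face" is a short vector of numbers and uses linear layers trained by plain stochastic gradient descent, whereas real tools use deep convolutional networks on aligned face crops. The names `train_swapper`, `reconstruction_error` and `swap_face` and the toy data are hypothetical.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def train_swapper(faces_a, faces_b, latent_dim=2, lr=0.02, epochs=300, seed=0):
    """Train ONE shared encoder and TWO identity-specific decoders with plain SGD.

    Decoder "A" only ever reconstructs faces of person A and decoder "B" only
    faces of person B, but both read the latent code of the same encoder.
    """
    rng = random.Random(seed)
    dim = len(faces_a[0])
    enc = rand_matrix(latent_dim, dim, rng)          # shared encoder: face -> latent
    dec = {"A": rand_matrix(dim, latent_dim, rng),   # decoder for identity A
           "B": rand_matrix(dim, latent_dim, rng)}   # decoder for identity B
    data = [(x, "A") for x in faces_a] + [(x, "B") for x in faces_b]
    for _ in range(epochs):
        for x, who in data:
            d = dec[who]
            z = matvec(enc, x)
            # Gradient of 0.5 * ||x_hat - x||^2 with respect to x_hat.
            err = [xh - xi for xh, xi in zip(matvec(d, z), x)]
            # Backpropagate to the latent code before updating the decoder weights.
            grad_z = [sum(d[i][k] * err[i] for i in range(dim)) for k in range(latent_dim)]
            for i in range(dim):
                for k in range(latent_dim):
                    d[i][k] -= lr * err[i] * z[k]
            for k in range(latent_dim):
                for j in range(dim):
                    enc[k][j] -= lr * grad_z[k] * x[j]
    return enc, dec

def reconstruction_error(enc, dec, faces, who):
    """Average squared reconstruction error of one identity's encoder-decoder path."""
    total = 0.0
    for x in faces:
        x_hat = matvec(dec[who], matvec(enc, x))
        total += sum((a - b) ** 2 for a, b in zip(x_hat, x))
    return total / len(faces)

def swap_face(enc, dec, face, target):
    """The swap: encode with the shared encoder, decode with the OTHER identity's decoder."""
    return matvec(dec[target], matvec(enc, face))

# Toy "faces": a per-identity base vector plus a shared pose/expression direction.
base_a, base_b = [1.0, 0.2, 0.0, 0.5], [0.0, 0.6, 1.0, 0.1]
pose = [0.0, 0.5, -0.3, 0.4]
faces_a = [[b + p * d for b, d in zip(base_a, pose)] for p in (-1.0, -0.5, 0.0, 0.5, 1.0)]
faces_b = [[b + p * d for b, d in zip(base_b, pose)] for p in (-1.0, -0.5, 0.0, 0.5, 1.0)]

enc, dec = train_swapper(faces_a, faces_b)
fake = swap_face(enc, dec, faces_a[0], target="B")  # A's input rendered by B's decoder
```

The toy only demonstrates the wiring: with linear layers and five samples per person, the swapped vector is not guaranteed to be a meaningful "face". Deep networks trained on thousands of aligned frames are what make the shared latent space capture pose and expression in a way both decoders can use.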

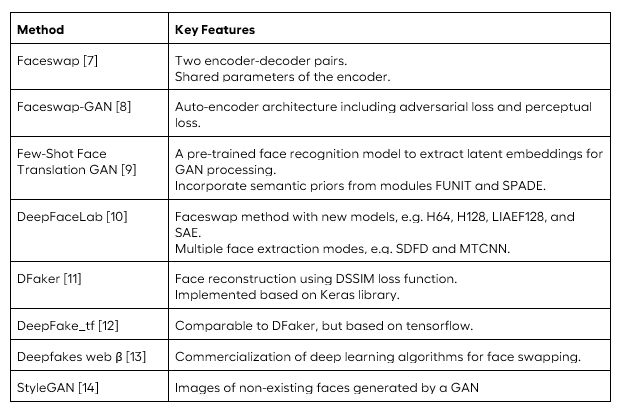

An enhanced version of deepfakes based on the generative adversarial network (GAN) [5], faceswap-GAN [8], adds an adversarial loss and a perceptual loss computed with VGGFace to the encoder-decoder architecture. The VGGFace perceptual loss makes eye movements look more realistic and more consistent with the input faces. It also helps smooth out artefacts, resulting in higher-quality output images. To make face detection and face alignment more stable, this version uses the multi-task cascaded convolutional neural network (MTCNN) from the FaceNet implementation [6]. Notable deepfake generation methods are summarized in Table 1.
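As a rough illustration of what adding these losses means, a generator objective of this kind is often written as a weighted sum. This is a generic formulation, not the exact loss of any specific repository: the weights $\lambda$, the choice of an L1 reconstruction term and the VGGFace layers $\ell$ are assumptions, and the adversarial term is shown in the standard non-saturating GAN form, which some implementations replace with variants such as a least-squares loss.

$$
\hat{x} = \mathrm{Dec}\big(\mathrm{Enc}(x)\big), \qquad
\mathcal{L}_{G} = \lambda_{\mathrm{rec}} \lVert x - \hat{x} \rVert_{1}
+ \lambda_{\mathrm{adv}} \big(-\log \mathcal{D}(\hat{x})\big)
+ \lambda_{\mathrm{perc}} \sum_{\ell} \lVert \phi_{\ell}(x) - \phi_{\ell}(\hat{x}) \rVert_{2}^{2}
$$

Here $\mathcal{D}$ is the discriminator, which estimates the probability that an image is real, and $\phi_{\ell}$ denotes the activations of layer $\ell$ of a pretrained VGGFace network. The perceptual term compares images in feature space rather than pixel by pixel, which is one reason it can help preserve facial details such as gaze direction.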

Malicious applications of deepfakes and government interventions

The first deepfake video emerged in 2017: the face of a celebrity was swapped onto that of a porn actor [7]. Since then, the technology has been used to influence public opinion. One example is the journalist Rana Ayyub, whose face was morphed into a pornographic video to make it appear that she had acted in it. This virulent attack came soon after she campaigned for justice for the Kathua rape victim. Deepfakes can also serve malicious personal motives. A mother from Pennsylvania allegedly used explicit deepfake videos to try to get her teenage daughter's cheerleading rivals kicked off the team [15].

The case of Malaysia's economic affairs minister Azmin Ali is a remarkable one. In 2019, Azmin was involved in a sex tape scandal in which he allegedly had sexual relations with a member of his political party, Haziq Abdul Aziz [16]. Because sodomy is a crime in Malaysia punishable by up to 20 years of imprisonment [17; 18], Azmin tried to evade the consequences by claiming that the video was a deepfake. Although no one has been able to prove otherwise, public opinion and the views of digital forensics experts, among others, have remained divided [19; 20]. When the public cannot tell which information is real and which is fake, people are more inclined to fall back on their existing biases [21].

The scandal had devastating consequences for the reputations and political careers of those involved. It also destabilized the governing coalition and led to an emphatic rejection of LGBT rights in Malaysia [22; 18]. According to Toews [21], deepfakes not only deepen social divisions and disrupt democratic discourse, but also undermine public safety and weaken journalism.

Governments and law enforcement agencies around the world are therefore taking action to tackle this problem. Two of the most frequently proposed government measures are investing in technology that can detect deepfakes and developing legislation to punish those who produce and distribute them [20]. According to Welch [23], better identification of deepfakes would not only help protect people's right to free expression while preventing the distribution of harmful content, but also help (social) media platforms improve their policies on deepfakes. In addition, appropriate legislation can make it illegal to distribute deepfakes with malicious intent and create resources for those who have been harmed by them [24].

Deepfake detection

But how can the justice system act on realistic deepfakes and protect our society when the boundary between real and fake digital imagery is increasingly fading?

Mandet 2.0 includes a deepfake detection filter, DeepFake Detection, that not only classifies whether an image is a deepfake or real but also explains the reasoning behind its classification. The main component of this filter is DeepDetector, a neural network that takes a picture of a person as input and analyses the face for signs of alteration. DeepDetector then outputs the probability that the face has been altered or generated by deepfake technology.
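The internal architecture of DeepDetector is not described here. As a generic illustration of how a binary classifier turns a raw network score into a probability, the sketch below applies a logistic (sigmoid) function to a hypothetical final linear layer. The function names, the feature vector and the 0.5 threshold are all assumptions, not DeepDetector's API.

```python
import math

def sigmoid(z: float) -> float:
    """Numerically stable logistic function mapping a raw score (logit) to (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # avoids overflow for large negative z
    return e / (1.0 + e)

def deepfake_probability(features, weights, bias):
    """Hypothetical final layer: linear score over face features, then sigmoid."""
    logit = sum(w * f for w, f in zip(weights, features)) + bias
    return sigmoid(logit)

def classify(probability, threshold=0.5):
    """Turn the probability into a binary label (threshold is an assumption)."""
    return "deepfake" if probability >= threshold else "real"

p = deepfake_probability([0.8, 1.2], [1.5, 0.7], -1.0)
label = classify(p)
```

The 0.5 threshold is an arbitrary default; in practice it would be tuned to trade off false alarms against missed deepfakes, and a forensic examiner may find the probability itself more informative than the label.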

DeepDetector was developed by DuckDuckGoose [25] and is inspired by recent advances in deepfake generation and deepfake detection [26].

Highlighting deepfake artefacts

There are many different techniques for generating deepfakes, and they leave “artefacts” behind in images or videos. Many face editing and generation algorithms produce artefacts that resemble classical computer vision problems stemming from face tracking and editing [27]. These imperfections make it possible to distinguish real from deepfaked material. Deepfake artefacts can include unrealistic facial features or incoherent transitions between frames.
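To make “incoherent transitions between frames” concrete, here is a deliberately crude, hypothetical heuristic, not DeepDetector's method: it measures how much each frame differs from the previous one and flags transitions that change far more than is typical for the clip. It assumes frames are already cropped to the face, equally sized and given as grids of grayscale intensities; the function names and the factor of 3 are arbitrary choices.

```python
from statistics import median

def mean_abs_diff(f1, f2):
    """Mean absolute per-pixel difference between two equally sized frames."""
    total, count = 0.0, 0
    for row1, row2 in zip(f1, f2):
        for a, b in zip(row1, row2):
            total += abs(a - b)
            count += 1
    return total / count

def flag_incoherent_transitions(frames, factor=3.0):
    """Return indices i where the jump from frame i to frame i+1 is unusually large."""
    diffs = [mean_abs_diff(a, b) for a, b in zip(frames, frames[1:])]
    if not diffs:
        return []
    typical = median(diffs)  # robust estimate of normal frame-to-frame change
    return [i for i, d in enumerate(diffs) if d > factor * typical]
```

Scene cuts and fast head movements also produce such spikes, so a flag like this is at most a pointer for closer inspection; learned detectors model temporal behaviour far more finely.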

However, current deepfake generation techniques have become so good that generated or manipulated faces look increasingly realistic, leaving artefacts that are very hard to spot with the naked eye. One example is the light reflected in the eyes. In a picture of a real face, the reflections in the two eyes are similar because both eyes are looking at the same scene. Deepfake images synthesized by GANs, however, often fail to capture this resemblance and exhibit inconsistencies, such as reflections with different geometric shapes or in mismatched locations.
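The eye-reflection cue can be sketched as a comparison of the bright specular highlights in the two eyes. This minimal sketch assumes both eye crops have already been located, aligned and resized to the same grid of grayscale intensities in [0, 1], and uses an arbitrary brightness threshold of 0.8. It scores consistency as the intersection over union (IoU) of the two highlight masks: values near 1 mean matching reflections, values near 0 mean mismatched shapes or positions. The function names are hypothetical.

```python
def highlight_mask(eye, threshold=0.8):
    """Mark pixels brighter than the threshold as specular highlight."""
    return [[v >= threshold for v in row] for row in eye]

def mask_iou(m1, m2):
    """Intersection over union of two boolean masks of the same size."""
    inter = union = 0
    for r1, r2 in zip(m1, m2):
        for a, b in zip(r1, r2):
            if a and b:
                inter += 1
            if a or b:
                union += 1
    # No highlight in either eye: nothing to compare, treat as consistent.
    return inter / union if union else 1.0

def reflection_consistency(left_eye, right_eye, threshold=0.8):
    """IoU of the highlight masks of two aligned eye crops."""
    return mask_iou(highlight_mask(left_eye, threshold),
                    highlight_mask(right_eye, threshold))
```

Because both eyes see the same light sources, their highlights should sit at roughly the same position in each aligned crop, so a low score is one possible warning sign; head pose, occlusion and low resolution can also lower it.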

DeepDetector uses artificial neural networks to distinguish real from deepfaked footage. It also pinpoints the location of the artefacts introduced by the deepfake, in the form of an activation map computed from the input. This map assigns a score to each pixel, showing the user of the system where the deepfake has left artefacts.
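DeepDetector's map is described as an activation map computed from its input. To illustrate what a per-pixel score means, the sketch below uses a different, model-agnostic technique, occlusion sensitivity: it covers small patches of the image and records how much a detector's deepfake probability drops. The `score` callable stands in for any detector that returns a probability and is hypothetical, as are the patch size and fill value.

```python
def occlusion_map(image, score, patch=2, fill=0.5):
    """Per-pixel importance: average drop in score when a patch covering the pixel is occluded.

    image: 2D list of grayscale intensities; score: callable returning a deepfake probability.
    """
    h, w = len(image), len(image[0])
    if not 1 <= patch <= min(h, w):
        raise ValueError("patch must fit inside the image")
    base = score(image)
    heat = [[0.0] * w for _ in range(h)]
    hits = [[0] * w for _ in range(h)]
    for top in range(h - patch + 1):          # slide the patch with stride 1
        for left in range(w - patch + 1):
            occluded = [row[:] for row in image]
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    occluded[r][c] = fill
            drop = base - score(occluded)
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    heat[r][c] += drop
                    hits[r][c] += 1
    # With stride 1, every pixel is covered by at least one patch, so hits > 0.
    return [[heat[r][c] / hits[r][c] for c in range(w)] for r in range(h)]
```

Pixels whose occlusion lowers the score most receive the highest values. This approach needs one model evaluation per patch position, which is why activation- or gradient-based maps are often preferred for large images.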

Conclusion

Deepfake technology is an emerging threat to governmental stability. Nonetheless, there is good news: the deepfake infocalypse can be mitigated. AI technology can be used not only to create deepfakes but also to recognize them. Although AI systems are known to function as black boxes, DeepDetector, integrated in Mandet 2.0, generates explainable results that give insight into the reasoning behind this neural network's binary classification of deepfakes. This way, forensic examiners can detect deepfakes without relying fully on an AI system, instead using their own expertise to interpret the results generated by DeepDetector in Mandet 2.0.

References

[1] Caldwell, M., Andrews, J. T. A., Tanay, T., & Griffin, L. D. (2020). AI-enabled future crime. Crime Science, 9(1). DOI: 10.1186/s40163-020-00123-8

[2] Liveness.com - Biometric Liveness Detection Explained. (2021). Liveness.com. https://liveness.com

[3] deepfakes/faceswap. (2021). GitHub. https://github.com/deepfakes/f...

[4] Zucconi, A. (2018, 18 April). Understanding the Technology Behind DeepFakes. https://www.alanzucconi.com/20...

[5] Goodfellow, I. J., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A. & Bengio, Y. (2014). Generative Adversarial Networks.

[6] D. (2018). davidsandberg/facenet. GitHub. https://github.com/davidsandbe...

[7] Nguyen, T. T., Nguyen, Q. V. H., Nguyen, C. M., Nguyen, D., Nguyen, D. T., & Nahavandi, S. (2021). Deep Learning for Deepfakes Creation and Detection: A Survey. https://arxiv.org/pdf/1909.115...

[8] S. (2018). shaoanlu/faceswap-GAN. GitHub. https://github.com/shaoanlu/fa...

[9] S. (2019). shaoanlu/fewshot-face-translation-GAN. GitHub. https://github.com/shaoanlu/fe...

[10] I. (2021). iperov/DeepFaceLab. GitHub. https://github.com/iperov/Deep...

[11] D. (2020). dfaker/df. GitHub. https://github.com/dfaker/df

[12] S. (2018). StromWine/DeepFake_tf. GitHub. https://github.com/StromWine/D...

[13] Make Your Own Deepfakes [Online App]. (2021). Deepfakes Web. https://deepfakesweb.com

[14] NVlabs. (2019). NVlabs/stylegan. GitHub. https://github.com/NVlabs/styl...

[15] Guardian staff reporter. (2021, 15 March). Mother charged with deepfake plot against daughter’s cheerleading rivals. https://www.theguardian.com/us...

[16] Syndicated News. (2019). Authenticity of viral sex videos yet to be ascertained – The Mole. https://www.mole.my/authentici...

[17] Malaysian cabinet minister denies links to sex video. (2019, 9 December). https://www.euronews.com/2019/...

[18] Jha, P. (2019, 6 August). Malaysia’s New Sex Scandal: Look Beyond the Politics. https://thediplomat.com/2019/0...

[19] FMT Reporters. (2019, 17 June). Digital forensics experts not convinced that gay sex videos are fake. https://www.freemalaysiatoday....

[20] Aliran. (2021, 10 June). Why deepfakes are so dangerous. https://aliran.com/newsletters...

[21] Toews, R. (2020, 26 May). Deepfakes Are Going To Wreak Havoc On Society. We Are Not Prepared. https://www.forbes.com/sites/r...

[22] Cook, E. (2019, 29 August). Deep fakes could have real consequences for Southeast Asia. https://www.lowyinstitute.org/...

[23] Welch, C. (2019, 7 October). Can government fight back against deepfakes? https://apolitical.co/en/solut...

[24] Harrison, L. (2021, 20 April). Deepfakes Are on the Rise — How Should Government Respond?. https://www.govtech.com/policy...

[25] Solutions. (2021). DuckDuckGoose. https://www.duckduckgoose.nl/s...

[26] Fox, G., Liu, W., Kim, H., Seidel, H. P., Elgharib, M., & Theobalt, C. (2020). VideoForensicsHQ: Detecting High-quality Manipulated Face Videos. https://arxiv.org/pdf/2005.103...

[27] Matern, F., Riess, C., & Stamminger, M. (2019). Exploiting Visual Artifacts to Expose Deepfakes and Face Manipulations. 2019 IEEE Winter Applications of Computer Vision Workshops (WACVW), pp. 83–92. DOI: 10.1109/WACVW.2019.00020