Dehaze

02 August 2023

Article by James Zjalic (Verden Forensics) and Henk-Jan Lamfers (Foclar)

Problem

The most pronounced impact of haze on digital imagery is poor contrast, but it can also alter the representation of colours within the environment and reduce the overall visibility of objects within the scene [1]. Although haze is generally not as problematic as other quality issues, the enhancement operations that follow perform better if it is addressed first. In daytime captures it generally presents itself across the entire frame, as the sun is so distant from the illuminated environment that the lighting is consistent. During hours of darkness, the localised nature of artificial light can lead to haze affecting only specific regions within the frame.

Cause

Haze is defined as the suspension in the air of extremely small, dry particles which are invisible to the naked eye and sufficiently numerous to give the air an opalescent appearance [2]. The composition of these particles can vary: dust, water droplets, pollution from factories, exhaust fumes from vehicles, smoke, or a mixture of these [3]. These particles mix with those already in the atmosphere, absorbing and scattering light in a manner not observed under normal conditions. The result is a suppression of the dynamic range of colours within a digital image relative to the same scene without haze [4]. As the root cause is the interaction of light with the environment, haze is introduced during the initial acquisition stage and never during transmission. Due to the physical nature of the particles, its impact generally spans the entire length of a video rather than a few frames or a small region (notwithstanding recordings long enough for the suspended particles to disperse from the capture environment, or those in which the camera moves away from them). Its impact becomes more evident the further objects are from the camera lens, as there are more suspended particles between the object and the lens for the light to interact with.
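The distance dependence described above is commonly formalised in the dehazing literature with the atmospheric scattering model, which the article does not state explicitly: an observed pixel I is a blend of the haze-free scene radiance J and the atmospheric light A, I = J*t + A*(1 - t), where the transmission t = exp(-beta*d) falls with object distance d. The Python sketch below synthesises haze under that model; the scattering coefficient `beta` and the example values are illustrative assumptions, not measured quantities.

```python
import numpy as np

def add_haze(clear, depth, airlight, beta=0.8):
    """Synthesise haze with the atmospheric scattering model.

    clear    : H x W x 3 float array in [0, 1] (haze-free scene radiance J)
    depth    : H x W float array of object distances (arbitrary units)
    airlight : length-3 atmospheric light colour A in [0, 1]
    beta     : assumed scattering coefficient (illustrative value)
    """
    # Transmission falls off exponentially with distance: t = exp(-beta * d)
    t = np.exp(-beta * depth)[..., np.newaxis]
    # I = J * t + A * (1 - t): distant pixels drift towards the airlight colour
    return clear * t + np.asarray(airlight, dtype=np.float64) * (1.0 - t)

# Two identical black pixels, one near (d = 0.5) and one far (d = 5.0)
clear = np.zeros((1, 2, 3))
depth = np.array([[0.5, 5.0]])
hazy = add_haze(clear, depth, airlight=[0.8, 0.8, 0.8])
print(hazy[0, :, 0])
```

The near pixel reads about 0.26 and the far one about 0.79: the same black object is pushed much closer to the airlight colour when it is further away, which is the loss of contrast with distance described above.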

Solution

Solutions to reduce haze within forensic imagery are not as heavily researched as those for other operations such as brightening and sharpening, likely owing to how infrequently haze presents itself as a problem. Whilst the vast majority of imagery encountered in imagery forensics could benefit from some brightening and sharpening, the presence of haze, and thus the requirement to reduce it, is less common. Haze reduction also differs from traditional enhancement techniques, as the degradation depends on both the distance of objects from the camera and the regional density of the haze.

With that being said, the issues haze causes in other imaging sectors (such as video-guided transportation, surveillance and satellite imaging) mean that a number of solutions have been proposed. The operation goes by various names, such as de-fogging, haze removal or de-haze, but all describe reducing the impact of haze on digital imagery.

One of the simplest methods is similar to frame averaging: it exploits the random variation in the haze between frames. However, this is only possible for video recordings (as multiple frames are required to obtain an average) and is not possible for imagery in which the camera is moving.
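A minimal Python sketch of the temporal averaging idea follows. It assumes co-registered frames from a static camera and, as a simplification, models the haze variation as zero-mean random fluctuation around the true pixel value. Any persistent haze component common to all frames is not removed by averaging.

```python
import numpy as np

def temporal_average(frames):
    """Average a stack of co-registered frames (N x H x W or N x H x W x C).

    Assumes a static camera, so each pixel sees the same scene point in every
    frame; random frame-to-frame fluctuations then partially cancel out.
    """
    stack = np.asarray(frames, dtype=np.float64)
    return stack.mean(axis=0)

rng = np.random.default_rng(0)
scene = np.full((4, 4), 0.5)
# Simulate 50 frames with random haze-like fluctuation around the scene value
frames = [scene + rng.normal(0.0, 0.1, scene.shape) for _ in range(50)]
avg = temporal_average(frames)
print(np.abs(avg - scene).mean(), np.abs(frames[0] - scene).mean())
```

The averaged frame deviates from the true scene far less than any single frame does, because independent fluctuations shrink roughly with the square root of the number of frames.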

A second solution is histogram equalisation, a technique that redistributes the narrow range of grey levels in an image across a wider dynamic range, thus addressing the compressed grey range and reduced contrast caused by haze. The method can be applied globally or locally, with the local method providing better results as it can accommodate varying scene depths. A halo effect can also occur when global adjustments are made, as they cannot adapt to local regions of brightness [5].
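Global histogram equalisation can be sketched in a few lines of NumPy for an 8-bit greyscale frame: the cumulative histogram is used as a look-up table so that output grey levels are spread more evenly over 0 to 255. This is a generic textbook formulation, not the implementation of any particular tool; for the local variant, OpenCV's contrast-limited adaptive histogram equalisation (`cv2.createCLAHE`) is one widely available option.

```python
import numpy as np

def equalise_histogram(gray):
    """Global histogram equalisation of an 8-bit greyscale image."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]          # count in the first non-empty bin
    total = gray.size
    if total == cdf_min:               # flat image: nothing to stretch
        return gray.copy()
    # Map each grey level so the cumulative distribution becomes ~uniform
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]

# A hazy, low-contrast frame occupying only grey levels 100..139
hazy = (np.arange(64 * 64).reshape(64, 64) % 40 + 100).astype(np.uint8)
out = equalise_histogram(hazy)
print(hazy.min(), hazy.max(), "->", out.min(), out.max())
```

The synthetic frame only spans grey levels 100 to 139; after equalisation it spans the full 0 to 255 range.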

The blackbody theory exploits the expectation that objects known to be black should be represented by pixel values close to zero; any deviation from this value can then be attenuated. This theory assumes that the atmosphere is homogeneous and that the non-zero offset of black objects is constant. It would, therefore, not be applicable during hours of darkness or to captures over distances that cause local haze effects [6].
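Under the same assumptions (homogeneous atmosphere, constant offset), the blackbody approach reduces to a dark-object subtraction. In the sketch below, the region known to contain a black object is supplied by hand as a pair of slices (a hypothetical input; in practice the analyst would have to identify it). The per-channel offset is estimated as the mean of that region, subtracted, and the remaining range is stretched back to [0, 1].

```python
import numpy as np

def dark_object_subtract(img, black_region):
    """Remove a constant haze offset estimated from a known-black region.

    img          : H x W x 3 float array in [0, 1]
    black_region : (row_slice, col_slice) covering an object known to be black
    """
    rows, cols = black_region
    # Per-channel offset: what the black object reads instead of ~0
    offset = img[rows, cols].reshape(-1, img.shape[2]).mean(axis=0)
    # Subtract the offset, then stretch the remaining range back to [0, 1]
    corrected = (img - offset) / np.maximum(1.0 - offset, 1e-6)
    return np.clip(corrected, 0.0, 1.0), offset

img = np.full((10, 10, 3), 0.3)      # upper half: a black object lifted by haze
img[5:] = 0.9                        # lower half: a bright surface
corrected, offset = dark_object_subtract(img, (slice(0, 5), slice(0, 10)))
print(offset, corrected[0, 0], corrected[9, 9])
```

Because a single offset is applied to the whole frame, any spatial variation in haze density is left uncorrected, which is exactly the limitation noted above.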

Splitting an image into local blocks and maximising the contrast within each block [7], using machine learning to estimate the light and transmission maps (provided that other imagery of the same scene under normal conditions is available), and frequency-domain filtering [8] are amongst the other methods proposed [9].
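As one example of frequency-domain filtering, a generic homomorphic filter is sketched below; it is not the specific ratio-rule method of [8]. Taking the logarithm turns the multiplicative illumination and reflectance components into additive ones, so a Gaussian high-emphasis filter can attenuate the slowly varying, low-frequency component while boosting fine detail. The `cutoff`, `low_gain` and `high_gain` values are illustrative assumptions to be tuned per image.

```python
import numpy as np

def homomorphic_filter(gray, cutoff=30.0, low_gain=0.5, high_gain=1.5):
    """Homomorphic filtering of a greyscale image with values in [0, 1]."""
    h, w = gray.shape
    # Log domain: multiplicative components become additive
    log_img = np.log1p(gray)
    spectrum = np.fft.fftshift(np.fft.fft2(log_img))
    # Distance of each sample from the zero-frequency centre of the shifted spectrum
    u = np.arange(h) - h // 2
    v = np.arange(w) - w // 2
    d2 = u[:, None] ** 2 + v[None, :] ** 2
    # Gaussian high-emphasis filter: low_gain at DC, rising towards high_gain
    filt = low_gain + (high_gain - low_gain) * (1.0 - np.exp(-d2 / (2.0 * cutoff ** 2)))
    filtered = np.fft.ifft2(np.fft.ifftshift(spectrum * filt)).real
    out = np.expm1(filtered)
    # Rescale to [0, 1] for display
    out -= out.min()
    return out / max(out.max(), 1e-12)

frame = np.linspace(0.1, 0.9, 64 * 64).reshape(64, 64)
enhanced = homomorphic_filter(frame)
```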

Implementation

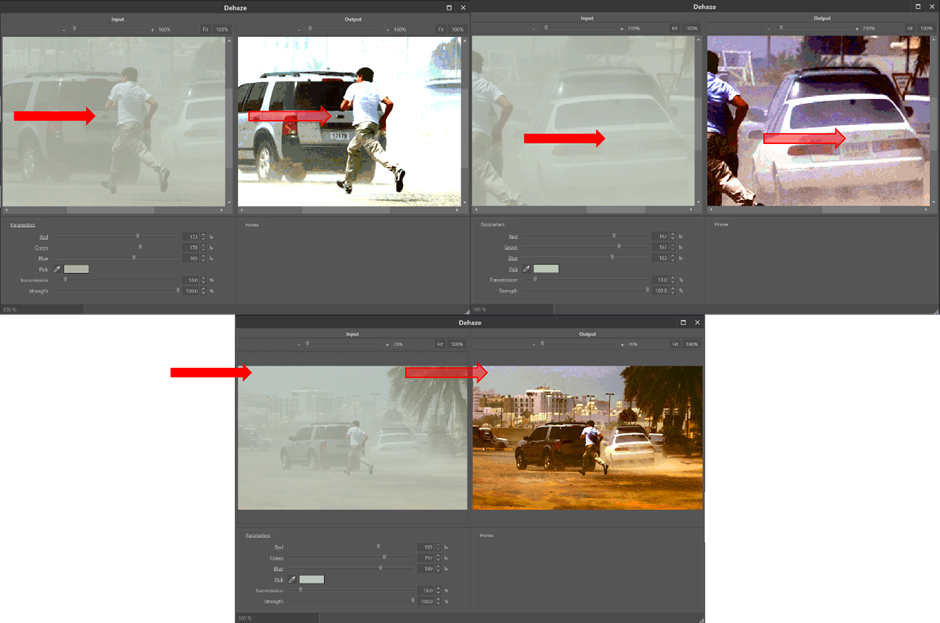

In Impress, the dehaze filter [10] can reduce the effects of mist, smog and sandstorms on images and video frames. Depending on the degree of overexposure, the filter can also help to suppress halos surrounding lights in the dark. When applying the filter, the atmospheric colour has to be selected. The filter detail window (see Figure 1) has a colour picker control, which allows the user to select the colour by clicking the Pick button (pipette) and then clicking an area of the left image (the filter's input image) that corresponds to the sky. With that click, the Red, Green and Blue values are set to those of the selected pixel.

The Transmission parameter is used to tune the distance from the camera at which the correction is optimal (see Figures 2 and 3). A lower Transmission value corresponds to the situation in which more of the environmental light was lost to the haze before it could be captured by the camera. This is the case, for example, when the object of interest is further from the camera, since the light then has to travel a longer path through the haze. Finally, the Strength parameter controls the strength of the effect applied to the image.
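The interplay of atmospheric colour, Transmission and Strength can be illustrated by inverting the atmospheric scattering model with a single, uniform transmission value. This is a hypothetical sketch for explanation only, not the Impress algorithm: the function name `dehaze`, the `t_min` floor and the linear Strength blend are assumptions. Picking a sky pixel with the colour picker corresponds to setting `atmospheric_colour = img[row, col]`.

```python
import numpy as np

def dehaze(img, atmospheric_colour, transmission=0.6, strength=1.0, t_min=0.1):
    """Invert I = J*t + A*(1 - t) with one global transmission (hypothetical).

    img                : H x W x 3 float array in [0, 1]
    atmospheric_colour : length-3 RGB of the haze, e.g. a picked sky pixel
    transmission       : assumed uniform t in (0, 1]; lower values model more
                         light lost to haze, as for more distant objects
    strength           : 0 returns the input, 1 returns the full restoration
    """
    A = np.asarray(atmospheric_colour, dtype=np.float64)
    t = max(transmission, t_min)      # floor avoids huge gains as t -> 0
    # J = (I - A) / t + A recovers the haze-free radiance
    restored = np.clip((img - A) / t + A, 0.0, 1.0)
    # Linear blend between the original and the restored image
    return (1.0 - strength) * img + strength * restored

rng = np.random.default_rng(1)
clear = rng.uniform(0.0, 1.0, (8, 8, 3))
A = np.array([0.8, 0.8, 0.8])         # e.g. the RGB of a clicked sky pixel
hazy = clear * 0.5 + A * 0.5          # synthetic haze with t = 0.5
restored = dehaze(hazy, A, transmission=0.5, strength=1.0)
print(np.abs(restored - clear).max())
```

When the Transmission setting matches the transmission used to synthesise the haze, the clear image is recovered; a lower setting amplifies the deviation from the atmospheric colour more strongly, which suits objects further from the camera but over-corrects nearer ones.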

Selecting the atmospheric colour of the haze is standard practice when optimising the dehaze filter. However, it can be beneficial to experiment with different reference colours. Figure 4 shows the results of the dehaze filter when the reference colour is picked from the back of each car (solid red arrows), right next to its number plate. The bottom image shows the most generally improved result; in this case, the reference colour was selected from an area that corresponds to the sky. Although this image is the most appealing overall, it does not reveal the maximum information about the number plates of the two cars.

Conclusion

Although the issues caused by haze are not as impactful as other quality issues, reducing haze early in the sequence of enhancement operations can optimise those which follow. As enhancement is a chain of individual operations, each of which plays a role in improving the image, one should always seek to address all of the quality issues with appropriate tools. Although contrast adjustments may reduce the effects of haze, they are not dedicated to the specific task of haze reduction. They will, therefore, not be as effective as an algorithm designed specifically for haze reduction and may have unintended effects on other elements of the imagery, such as the introduction of halos.

References

[1] J. Sumitha, “Haze Removal Techniques in Image Processing,” IJSRD - Int. J. Sci. Res. Dev., vol. 9, no. 2, Apr. 2021, ISSN (Online) 2321-0613.

[2] World Meteorological Organization, “Haze definition,” Jun. 08, 2023. [Online]. Available: https://cloudatlas.wmo.int/en/haze.html

[3] K. Ashwini, H. Nenavath, and R. K. Jatoth, “Image and video dehazing based on transmission estimation and refinement using Jaya algorithm,” Optik, vol. 265, p. 169565, Sep. 2022, doi: 10.1016/j.ijleo.2022.169565.

[4] W. Wang and X. Yuan, “Recent advances in image dehazing,” IEEE/CAA J. Autom. Sin., vol. 4, no. 3, pp. 410–436, 2017, doi: 10.1109/JAS.2017.7510532.

[5] Q. Wang and R. Ward, “Fast Image/Video Contrast Enhancement Based on WTHE,” in 2006 IEEE Workshop on Multimedia Signal Processing, Victoria, BC, Canada: IEEE, Oct. 2006, pp. 338–343. doi: 10.1109/MMSP.2006.285326.

[6] S. Fang, J. Zhan, Y. Cao, and R. Rao, “Improved single image dehazing using segmentation,” in 2010 IEEE International Conference on Image Processing, Hong Kong, Hong Kong: IEEE, Sep. 2010, pp. 3589–3592. doi: 10.1109/ICIP.2010.5651964.

[7] R. T. Tan, “Visibility in Bad Weather from A Single Image”.

[8] M.-J. Seow and V. K. Asari, “Ratio rule and homomorphic filter for enhancement of digital colour image,” Neurocomputing, vol. 69, no. 7–9, pp. 954–958, Mar. 2006, doi: 10.1016/j.neucom.2005.07.003.

[9] X.-W. Yao, X. Zhang, Y. Zhang, W. Xing, and X. Zhang, “Nighttime Image Dehazing Based on Point Light Sources,” Appl. Sci., vol. 12, no. 20, p. 10222, Oct. 2022, doi: 10.3390/app122010222.

[10] E.-K. Kim, J.-D. Lee, B. Moon, and Y.-H. Lee, “Hardware Architecture of Bilateral Filter to Remove Haze,” in Communication and Networking: International Conference, FGCN, Springer, 2011, p. 129.